使用循环长短期记忆网络的文本生成

本文将演示如何通过构建循环长短期记忆网络来构建文本生成器。训练网络的概念过程是首先向网络提供网络正在训练的文本中存在的每个字符到唯一数字的映射。然后将每个字符热编码为一个向量,该向量是网络所需的格式。

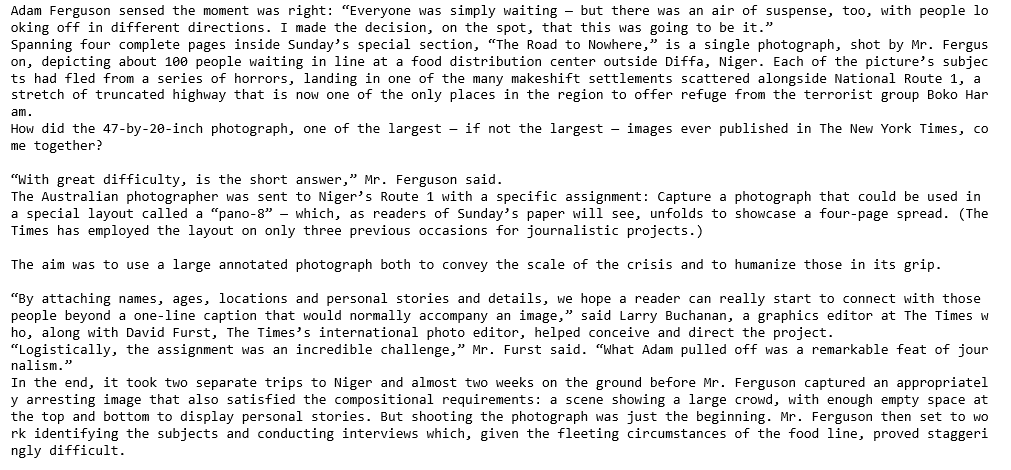

所述程序的数据是从 Kaggle 下载的。该数据集包含 2017 年 4 月至 2018 年 4 月在《纽约时报》上发表的文章。按发表月份划分。数据集采用 .csv 文件的形式,其中包含已发表文章的 url 以及其他详细信息。为训练过程选择了任意一个随机 url,然后在访问该 url 时,文本被复制到一个文本文件中,该文本文件用于训练过程。

第 1 步:导入所需的库

Python3

from __future__ import absolute_import, division,

print_function, unicode_literals

import numpy as np

import tensorflow as tf

from keras.models import Sequential

from keras.layers import Dense, Activation

from keras.layers import LSTM

from keras.optimizers import RMSprop

from keras.callbacks import LambdaCallback

from keras.callbacks import ModelCheckpoint

from keras.callbacks import ReduceLROnPlateau

import random

import sysPython3

# Changing the working location to the location of the text file

cd C:\Users\Dev\Desktop\Kaggle\New York Times

# Reading the text file into a string

with open('article1.txt', 'r') as file:

text = file.read()

# A preview of the text file

print(text)Python3

# Storing all the unique characters present in the text

vocabulary = sorted(list(set(text)))

# Creating dictionaries to map each character to an index

char_to_indices = dict((c, i) for i, c in enumerate(vocabulary))

indices_to_char = dict((i, c) for i, c in enumerate(vocabulary))

print(vocabulary)Python3

# Dividing the text into subsequences of length max_length

# So that at each time step the next max_length characters

# are fed into the network

max_length = 100

steps = 5

sentences = []

next_chars = []

for i in range(0, len(text) - max_length, steps):

sentences.append(text[i: i + max_length])

next_chars.append(text[i + max_length])

# Hot encoding each character into a boolean vector

X = np.zeros((len(sentences), max_length, len(vocabulary)), dtype = np.bool)

y = np.zeros((len(sentences), len(vocabulary)), dtype = np.bool)

for i, sentence in enumerate(sentences):

for t, char in enumerate(sentence):

X[i, t, char_to_indices[char]] = 1

y[i, char_to_indices[next_chars[i]]] = 1Python3

# Building the LSTM network for the task

model = Sequential()

model.add(LSTM(128, input_shape =(max_length, len(vocabulary))))

model.add(Dense(len(vocabulary)))

model.add(Activation('softmax'))

optimizer = RMSprop(lr = 0.01)

model.compile(loss ='categorical_crossentropy', optimizer = optimizer)Python3

# Helper function to sample an index from a probability array

def sample_index(preds, temperature = 1.0):

preds = np.asarray(preds).astype('float64')

preds = np.log(preds) / temperature

exp_preds = np.exp(preds)

preds = exp_preds / np.sum(exp_preds)

probas = np.random.multinomial(1, preds, 1)

return np.argmax(probas)Python3

# Helper function to generate text after the end of each epoch

def on_epoch_end(epoch, logs):

print()

print('----- Generating text after Epoch: % d' % epoch)

start_index = random.randint(0, len(text) - max_length - 1)

for diversity in [0.2, 0.5, 1.0, 1.2]:

print('----- diversity:', diversity)

generated = ''

sentence = text[start_index: start_index + max_length]

generated += sentence

print('----- Generating with seed: "' + sentence + '"')

sys.stdout.write(generated)

for i in range(400):

x_pred = np.zeros((1, max_length, len(vocabulary)))

for t, char in enumerate(sentence):

x_pred[0, t, char_to_indices[char]] = 1.

preds = model.predict(x_pred, verbose = 0)[0]

next_index = sample_index(preds, diversity)

next_char = indices_to_char[next_index]

generated += next_char

sentence = sentence[1:] + next_char

sys.stdout.write(next_char)

sys.stdout.flush()

print()

print_callback = LambdaCallback(on_epoch_end = on_epoch_end)Python3

# Defining a helper function to save the model after each epoch

# in which the loss decreases

filepath = "weights.hdf5"

checkpoint = ModelCheckpoint(filepath, monitor ='loss',

verbose = 1, save_best_only = True,

mode ='min')Python3

# Defining a helper function to reduce the learning rate each time

# the learning plateaus

reduce_alpha = ReduceLROnPlateau(monitor ='loss', factor = 0.2,

patience = 1, min_lr = 0.001)

callbacks = [print_callback, checkpoint, reduce_alpha]Python3

# Training the LSTM model

model.fit(X, y, batch_size = 128, epochs = 500, callbacks = callbacks)Python3

# Defining a utility function to generate new and random text based on the

# network's learnings

def generate_text(length, diversity):

# Get random starting text

start_index = random.randint(0, len(text) - max_length - 1)

generated = ''

sentence = text[start_index: start_index + max_length]

generated += sentence

for i in range(length):

x_pred = np.zeros((1, max_length, len(vocabulary)))

for t, char in enumerate(sentence):

x_pred[0, t, char_to_indices[char]] = 1.

preds = model.predict(x_pred, verbose = 0)[0]

next_index = sample_index(preds, diversity)

next_char = indices_to_char[next_index]

generated += next_char

sentence = sentence[1:] + next_char

return generated

print(generate_text(500, 0.2))步骤 2:将数据加载到字符串中

Python3

# Changing the working location to the location of the text file

cd C:\Users\Dev\Desktop\Kaggle\New York Times

# Reading the text file into a string

with open('article1.txt', 'r') as file:

text = file.read()

# A preview of the text file

print(text)

第 3 步:创建从文本中的每个唯一字符到唯一编号的映射

Python3

# Storing all the unique characters present in the text

vocabulary = sorted(list(set(text)))

# Creating dictionaries to map each character to an index

char_to_indices = dict((c, i) for i, c in enumerate(vocabulary))

indices_to_char = dict((i, c) for i, c in enumerate(vocabulary))

print(vocabulary)

第 4 步:预处理数据

Python3

# Dividing the text into subsequences of length max_length

# So that at each time step the next max_length characters

# are fed into the network

max_length = 100

steps = 5

sentences = []

next_chars = []

for i in range(0, len(text) - max_length, steps):

sentences.append(text[i: i + max_length])

next_chars.append(text[i + max_length])

# Hot encoding each character into a boolean vector

X = np.zeros((len(sentences), max_length, len(vocabulary)), dtype = np.bool)

y = np.zeros((len(sentences), len(vocabulary)), dtype = np.bool)

for i, sentence in enumerate(sentences):

for t, char in enumerate(sentence):

X[i, t, char_to_indices[char]] = 1

y[i, char_to_indices[next_chars[i]]] = 1

第 5 步:构建 LSTM 网络

Python3

# Building the LSTM network for the task

model = Sequential()

model.add(LSTM(128, input_shape =(max_length, len(vocabulary))))

model.add(Dense(len(vocabulary)))

model.add(Activation('softmax'))

optimizer = RMSprop(lr = 0.01)

model.compile(loss ='categorical_crossentropy', optimizer = optimizer)

第 6 步:定义将在网络训练期间使用的一些辅助函数

请注意,下面给出的前两个函数是从 Keras 团队的官方文本生成示例的文档中引用的。

a)辅助函数来采样下一个字符:

Python3

# Helper function to sample an index from a probability array

def sample_index(preds, temperature = 1.0):

preds = np.asarray(preds).astype('float64')

preds = np.log(preds) / temperature

exp_preds = np.exp(preds)

preds = exp_preds / np.sum(exp_preds)

probas = np.random.multinomial(1, preds, 1)

return np.argmax(probas)

b)在每个 epoch 之后生成文本的辅助函数

Python3

# Helper function to generate text after the end of each epoch

def on_epoch_end(epoch, logs):

print()

print('----- Generating text after Epoch: % d' % epoch)

start_index = random.randint(0, len(text) - max_length - 1)

for diversity in [0.2, 0.5, 1.0, 1.2]:

print('----- diversity:', diversity)

generated = ''

sentence = text[start_index: start_index + max_length]

generated += sentence

print('----- Generating with seed: "' + sentence + '"')

sys.stdout.write(generated)

for i in range(400):

x_pred = np.zeros((1, max_length, len(vocabulary)))

for t, char in enumerate(sentence):

x_pred[0, t, char_to_indices[char]] = 1.

preds = model.predict(x_pred, verbose = 0)[0]

next_index = sample_index(preds, diversity)

next_char = indices_to_char[next_index]

generated += next_char

sentence = sentence[1:] + next_char

sys.stdout.write(next_char)

sys.stdout.flush()

print()

print_callback = LambdaCallback(on_epoch_end = on_epoch_end)

c)在损失减少的每个 epoch 之后保存模型的辅助函数

Python3

# Defining a helper function to save the model after each epoch

# in which the loss decreases

filepath = "weights.hdf5"

checkpoint = ModelCheckpoint(filepath, monitor ='loss',

verbose = 1, save_best_only = True,

mode ='min')

d)每次学习平台期降低学习率的辅助函数

Python3

# Defining a helper function to reduce the learning rate each time

# the learning plateaus

reduce_alpha = ReduceLROnPlateau(monitor ='loss', factor = 0.2,

patience = 1, min_lr = 0.001)

callbacks = [print_callback, checkpoint, reduce_alpha]

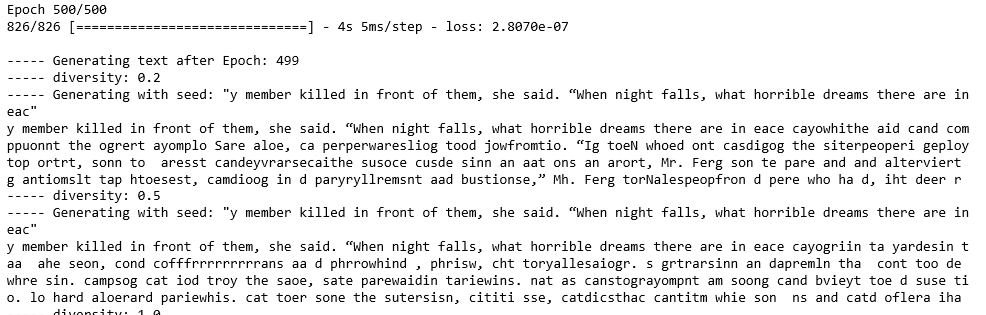

第 7 步:训练 LSTM 模型

Python3

# Training the LSTM model

model.fit(X, y, batch_size = 128, epochs = 500, callbacks = callbacks)

第 8 步:生成新的随机文本

Python3

# Defining a utility function to generate new and random text based on the

# network's learnings

def generate_text(length, diversity):

# Get random starting text

start_index = random.randint(0, len(text) - max_length - 1)

generated = ''

sentence = text[start_index: start_index + max_length]

generated += sentence

for i in range(length):

x_pred = np.zeros((1, max_length, len(vocabulary)))

for t, char in enumerate(sentence):

x_pred[0, t, char_to_indices[char]] = 1.

preds = model.predict(x_pred, verbose = 0)[0]

next_index = sample_index(preds, diversity)

next_char = indices_to_char[next_index]

generated += next_char

sentence = sentence[1:] + next_char

return generated

print(generate_text(500, 0.2))