在Python中将 PySpark DataFrame 转换为字典

在本文中,我们将看到如何将 PySpark 数据框转换为字典,其中键是列名,值是列值。

在开始之前,我们将创建一个示例数据框:

Python3

# Importing necessary libraries

from pyspark.sql import SparkSession

# Create a spark session

spark = SparkSession.builder.appName('DF_to_dict').getOrCreate()

# Create data in dataframe

data = [(('Ram'), '1991-04-01', 'M', 3000),

(('Mike'), '2000-05-19', 'M', 4000),

(('Rohini'), '1978-09-05', 'M', 4000),

(('Maria'), '1967-12-01', 'F', 4000),

(('Jenis'), '1980-02-17', 'F', 1200)]

# Column names in dataframe

columns = ["Name", "DOB", "Gender", "salary"]

# Create the spark dataframe

df = spark.createDataFrame(data=data,

schema=columns)

# Print the dataframe

df.show()Python3

# Declare an empty Dictionary

dict = {}

# Convert PySpark DataFrame to Pandas

# DataFrame

df = df.toPandas()

# Traverse through each column

for column in df.columns:

# Add key as column_name and

# value as list of column values

dict[column] = df[column].values.tolist()

# Print the dictionary

print(dict)Python3

import numpy as np

# Convert the dataframe into list

# of rows

rows = [list(row) for row in df.collect()]

# COnvert the list into numpy array

ar = np.array(rows)

# Declare an empty dictionary

dict = {}

# Get through each column

for i, column in enumerate(df.columns):

# Add ith column as values in dict

# with key as ith column_name

dict[column] = list(ar[:, i])

# Print the dictionary

print(dict)Python3

# COnvert PySpark dataframe to pandas

# dataframe

df = df.toPandas()

# Convert the dataframe into

# dictionary

dict = df.to_dict(orient = 'list')

# Print the dictionary

print(dict)Python3

# Importing necessary libraries

from pyspark.sql import SparkSession

# Create a spark session

spark = SparkSession.builder.appName('DF_to_dict').getOrCreate()

# Create data in dataframe

data = [(('Hyderabad'), 120000),

(('Delhi'), 124000),

(('Mumbai'), 344000),

(('Guntur'), 454000),

(('Bandra'), 111200)]

# Column names in dataframe

columns = ["Location", 'House_price']

# Create the spark dataframe

df = spark.createDataFrame(data=data, schema=columns)

# Print the dataframe

print('Dataframe : ')

df.show()

# COnvert PySpark dataframe to

# pandas dataframe

df = df.toPandas()

# Convert the dataframe into

# dictionary

dict = df.to_dict(orient='list')

# Print the dictionary

print('Dictionary :')

print(dict)输出 :

方法一:使用 df.toPandas()

使用 df.toPandas() 将 PySpark 数据框转换为 Pandas 数据框。

Syntax: DataFrame.toPandas()

Return type: Returns the pandas data frame having the same content as Pyspark Dataframe.

遍历每个列值并将值列表添加到以列名作为键的字典中。

蟒蛇3

# Declare an empty Dictionary

dict = {}

# Convert PySpark DataFrame to Pandas

# DataFrame

df = df.toPandas()

# Traverse through each column

for column in df.columns:

# Add key as column_name and

# value as list of column values

dict[column] = df[column].values.tolist()

# Print the dictionary

print(dict)

输出 :

{‘Name’: [‘Ram’, ‘Mike’, ‘Rohini’, ‘Maria’, ‘Jenis’],

‘DOB’: [‘1991-04-01’, ‘2000-05-19’, ‘1978-09-05’, ‘1967-12-01’, ‘1980-02-17’],

‘Gender’: [‘M’, ‘M’, ‘M’, ‘F’, ‘F’],

‘salary’: [3000, 4000, 4000, 4000, 1200]}

方法 2:使用 df.collect()

将 PySpark 数据框转换为行列表,并以列表形式返回一个数据框的所有记录。

Syntax: DataFrame.collect()

Return type: Returns all the records of the data frame as a list of rows.

蟒蛇3

import numpy as np

# Convert the dataframe into list

# of rows

rows = [list(row) for row in df.collect()]

# COnvert the list into numpy array

ar = np.array(rows)

# Declare an empty dictionary

dict = {}

# Get through each column

for i, column in enumerate(df.columns):

# Add ith column as values in dict

# with key as ith column_name

dict[column] = list(ar[:, i])

# Print the dictionary

print(dict)

输出 :

{‘Name’: [‘Ram’, ‘Mike’, ‘Rohini’, ‘Maria’, ‘Jenis’],

‘DOB’: [‘1991-04-01’, ‘2000-05-19’, ‘1978-09-05’, ‘1967-12-01’, ‘1980-02-17’],

‘Gender’: [‘M’, ‘M’, ‘M’, ‘F’, ‘F’],

‘salary’: [‘3000’, ‘4000’, ‘4000’, ‘4000’, ‘1200’]}

方法 3:使用 pandas.DataFrame.to_dict()

可以使用to_dict()方法直接将 Pandas 数据框转换为字典

Syntax: DataFrame.to_dict(orient=’dict’,)

Parameters:

- orient: Indicating the type of values of the dictionary. It takes values such as {‘dict’, ‘list’, ‘series’, ‘split’, ‘records’, ‘index’}

Return type: Returns the dictionary corresponding to the data frame.

代码:

蟒蛇3

# COnvert PySpark dataframe to pandas

# dataframe

df = df.toPandas()

# Convert the dataframe into

# dictionary

dict = df.to_dict(orient = 'list')

# Print the dictionary

print(dict)

输出 :

{‘Name’: [‘Ram’, ‘Mike’, ‘Rohini’, ‘Maria’, ‘Jenis’],

‘DOB’: [‘1991-04-01’, ‘2000-05-19’, ‘1978-09-05’, ‘1967-12-01’, ‘1980-02-17’],

‘Gender’: [‘M’, ‘M’, ‘M’, ‘F’, ‘F’],

‘salary’: [3000, 4000, 4000, 4000, 1200]}

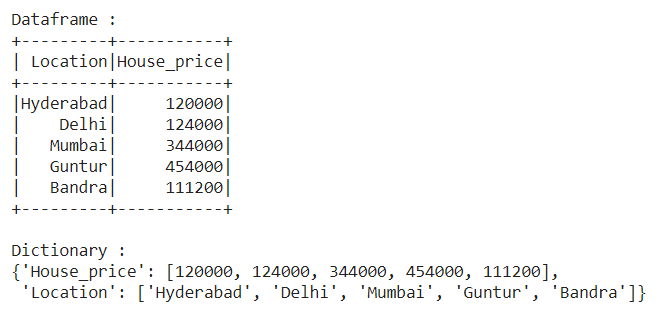

将具有 2 列的数据框转换为字典,创建一个具有2 列名为“位置”和“房屋价格”的数据框

蟒蛇3

# Importing necessary libraries

from pyspark.sql import SparkSession

# Create a spark session

spark = SparkSession.builder.appName('DF_to_dict').getOrCreate()

# Create data in dataframe

data = [(('Hyderabad'), 120000),

(('Delhi'), 124000),

(('Mumbai'), 344000),

(('Guntur'), 454000),

(('Bandra'), 111200)]

# Column names in dataframe

columns = ["Location", 'House_price']

# Create the spark dataframe

df = spark.createDataFrame(data=data, schema=columns)

# Print the dataframe

print('Dataframe : ')

df.show()

# COnvert PySpark dataframe to

# pandas dataframe

df = df.toPandas()

# Convert the dataframe into

# dictionary

dict = df.to_dict(orient='list')

# Print the dictionary

print('Dictionary :')

print(dict)

输出 :