Renaming Columns for PySpark DataFrame Aggregates

In this article, we discuss how to rename the columns that result from aggregating a PySpark DataFrame.

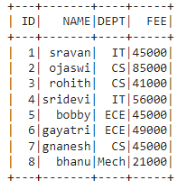

正在使用的数据框:

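In PySpark, groupBy() collects rows with identical values into groups on a DataFrame so that aggregate functions can be applied to each group. The aggregate functions used in this article, such as sum(), avg(), mean(), min(), max(), and count(), are available in the pyspark.sql.functions module.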
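To make the grouping semantics concrete, here is a minimal plain-Python sketch of what grouping by DEPT and summing FEE computes, using the student rows from the examples in this article. It only illustrates the result and is not how Spark executes the query; `group_and_sum` is a hypothetical helper name introduced for this illustration.

```python
from collections import defaultdict

# Same student rows as in the article's examples: (ID, NAME, DEPT, FEE)
rows = [
    ("1", "sravan", "IT", 45000),
    ("2", "ojaswi", "CS", 85000),
    ("3", "rohith", "CS", 41000),
    ("4", "sridevi", "IT", 56000),
    ("5", "bobby", "ECE", 45000),
    ("6", "gayatri", "ECE", 49000),
    ("7", "gnanesh", "CS", 45000),
    ("8", "bhanu", "Mech", 21000),
]


def group_and_sum(rows, key_index, value_index):
    """Group rows by one field and sum another (hypothetical helper)."""
    totals = defaultdict(int)
    for row in rows:
        totals[row[key_index]] += row[value_index]
    return dict(totals)


total_fee_by_dept = group_and_sum(rows, key_index=2, value_index=3)
print(total_fee_by_dept)
# {'IT': 101000, 'CS': 171000, 'ECE': 94000, 'Mech': 21000}
```

In PySpark the same totals come back as a DataFrame with one row per department, and the name of the column holding the totals is what the methods in this article change.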

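Method 1: Using alias()

We can use this method to set the name of an aggregated column.

Syntax:

dataframe.groupBy('column_name_group').agg(aggregate_function('column_name').alias("new_column_name"))

where,

- dataframe is the input DataFrame
- column_name_group is the column to group by
- aggregate_function is one of the aggregate functions from the functions module
- column_name is the column on which the aggregation is performed
- new_column_name is the new name given to the aggregated column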

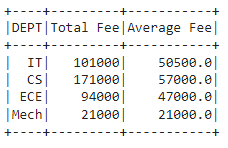

Example 1: Aggregating the FEE column by DEPT with sum() and avg(), renaming the results to Total Fee and Average Fee

Python3

# importing module
import pyspark

# importing sparksession from pyspark.sql module
from pyspark.sql import SparkSession

# import functions
from pyspark.sql import functions

# creating sparksession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of student data
data = [["1", "sravan", "IT", 45000],
        ["2", "ojaswi", "CS", 85000],
        ["3", "rohith", "CS", 41000],
        ["4", "sridevi", "IT", 56000],
        ["5", "bobby", "ECE", 45000],
        ["6", "gayatri", "ECE", 49000],
        ["7", "gnanesh", "CS", 45000],
        ["8", "bhanu", "Mech", 21000]
        ]

# specify column names
columns = ['ID', 'NAME', 'DEPT', 'FEE']

# creating a dataframe from the lists of data
dataframe = spark.createDataFrame(data, columns)

# aggregating FEE by DEPT with sum() and avg(),
# naming the results "Total Fee" and "Average Fee"
dataframe.groupBy('DEPT').agg(
    functions.sum('FEE').alias("Total Fee"),
    functions.avg('FEE').alias("Average Fee")).show()

Output:

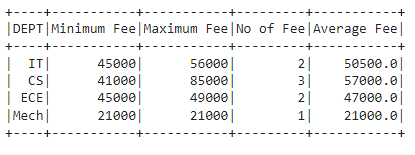

Example 2: Aggregating the FEE column by DEPT with min(), max(), count(), and mean(), giving each result a new name

Python3

# importing module
import pyspark

# importing sparksession from pyspark.sql module
from pyspark.sql import SparkSession

# import functions
from pyspark.sql import functions

# creating sparksession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of student data
data = [["1", "sravan", "IT", 45000],
        ["2", "ojaswi", "CS", 85000],
        ["3", "rohith", "CS", 41000],
        ["4", "sridevi", "IT", 56000],
        ["5", "bobby", "ECE", 45000],
        ["6", "gayatri", "ECE", 49000],
        ["7", "gnanesh", "CS", 45000],
        ["8", "bhanu", "Mech", 21000]
        ]

# specify column names
columns = ['ID', 'NAME', 'DEPT', 'FEE']

# creating a dataframe from the lists of data
dataframe = spark.createDataFrame(data, columns)

# aggregating FEE by DEPT with min(), max(), count() and mean(),
# giving each result column a descriptive name
dataframe.groupBy('DEPT').agg(
    functions.min('FEE').alias("Minimum Fee"),
    functions.max('FEE').alias("Maximum Fee"),
    functions.count('FEE').alias("No of Fee"),
    functions.mean('FEE').alias("Average Fee")).show()

Output:

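Method 2: Using withColumnRenamed()

This method takes the name of the column produced by the aggregation and renames it. After aggregation, the result column is named aggregate_operation(column_name), for example sum(FEE), so we can pass that name to withColumnRenamed() and replace it with our new column name.

Syntax:

dataframe.groupBy("column_name_group").agg({"column_name": "aggregate_operation"}).withColumnRenamed("aggregate_operation(column_name)", "new_column_name")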
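When several aggregated columns need new names, the renaming step in the syntax above can be repeated once per column. The following is a minimal sketch rather than part of the original article: `default_agg_name` and `rename_aggregates` are hypothetical helper names, and the generated names assume the aggregate_operation(column_name) pattern described above. Spark may report some operations under a different name (for instance, `mean` commonly appears as `avg`), so it is worth checking `df.columns` before relying on a generated name.

```python
from functools import reduce


def default_agg_name(operation, column):
    """Build the name a dictionary-style aggregate is expected to get, e.g. 'sum(FEE)'."""
    return f"{operation}({column})"


def rename_aggregates(df, renames):
    """Call withColumnRenamed once for every old_name -> new_name pair.

    `df` is assumed to be a PySpark DataFrame; any object whose
    withColumnRenamed(old, new) returns a new frame works the same way.
    """
    return reduce(
        lambda frame, pair: frame.withColumnRenamed(pair[0], pair[1]),
        renames.items(),
        df,
    )


# Hypothetical usage with the DataFrame from this article:
# aggregated = dataframe.groupBy("DEPT").agg({"FEE": "sum", "ID": "count"})
# rename_aggregates(aggregated, {
#     default_agg_name("sum", "FEE"): "Total Fee",
#     default_agg_name("count", "ID"): "No of Students",
# }).show()
```

Note that withColumnRenamed() leaves the DataFrame unchanged when the old name is not present, so a mistyped aggregate name does not raise an error; the column simply keeps its default name.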

Example: Aggregating the FEE column by DEPT with sum() and renaming the result to Total Fee

Python3

# importing module
import pyspark

# importing sparksession from pyspark.sql module
from pyspark.sql import SparkSession

# import functions
from pyspark.sql import functions

# creating sparksession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of student data
data = [["1", "sravan", "IT", 45000],
        ["2", "ojaswi", "CS", 85000],
        ["3", "rohith", "CS", 41000],
        ["4", "sridevi", "IT", 56000],
        ["5", "bobby", "ECE", 45000],
        ["6", "gayatri", "ECE", 49000],
        ["7", "gnanesh", "CS", 45000],
        ["8", "bhanu", "Mech", 21000]
        ]

# specify column names
columns = ['ID', 'NAME', 'DEPT', 'FEE']

# creating a dataframe from the lists of data
dataframe = spark.createDataFrame(data, columns)

# aggregating FEE by DEPT with sum() and renaming the result to Total Fee
dataframe.groupBy("DEPT").agg({"FEE": "sum"}).withColumnRenamed(
    "sum(FEE)", "Total Fee").show()

Output: