Python – LRU Cache

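An LRU (least recently used) cache is mainly used for memory organization. Its elements are kept in a queue ordered by recency of use: it behaves like a first-in-first-out queue, except that a page moves back to the front whenever it is referenced again. We are given the total set of page numbers that can be referenced, and the cache (or memory) size, which is the number of page frames the cache can hold at one time. The LRU caching scheme removes the least recently used frame when the cache is full and a new page that is not in the cache is referenced. Two terms come up regularly with an LRU cache: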

- Page hit: if the required page is found in main memory, it is a page hit.

- Page fault: if the required page is not found in main memory, a page fault occurs.

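When a page is referenced, the required page may already be in memory. If it is, we detach its node from the linked list and move it to the front of the queue.

If the required page is not in memory, we bring it into memory. In simple terms, we add a new node to the front of the queue and update the corresponding node address in the hash. If the queue is full, i.e. all frames are occupied, we remove a node from the rear of the queue and then add the new node to the front.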
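The two cases above can be sketched with a doubly linked list as the queue and a dictionary as the hash. This is only a minimal illustration: the names `PageCache`, `_Node`, `reference`, and `pages` are hypothetical and do not come from the article, and `frames` is assumed to be at least 1.

```python
class _Node:
    """Doubly linked list node holding one page number (hypothetical helper)."""

    def __init__(self, page=None):
        self.page = page
        self.prev = None
        self.next = None


class PageCache:
    """Queue of pages ordered by recency, plus a hash of page -> node."""

    def __init__(self, frames):
        self.frames = frames          # assumed to be >= 1
        self.nodes = {}               # hash: page number -> its node
        self.front = _Node()          # sentinel before the most recently used page
        self.rear = _Node()           # sentinel after the least recently used page
        self.front.next = self.rear
        self.rear.prev = self.front

    def _detach(self, node):
        node.prev.next = node.next
        node.next.prev = node.prev

    def _push_front(self, node):
        node.next = self.front.next
        node.prev = self.front
        self.front.next.prev = node
        self.front.next = node

    def reference(self, page):
        """Reference a page; return True on a page hit, False on a page fault."""
        node = self.nodes.get(page)
        if node is not None:
            # Page hit: detach the node and move it to the front.
            self._detach(node)
            self._push_front(node)
            return True
        if len(self.nodes) >= self.frames:
            # All frames are full: remove the node at the rear.
            victim = self.rear.prev
            self._detach(victim)
            del self.nodes[victim.page]
        # Page fault: add a new node at the front and record it in the hash.
        node = _Node(page)
        self._push_front(node)
        self.nodes[page] = node
        return False

    def pages(self):
        """Return the pages from most to least recently used."""
        out, node = [], self.front.next
        while node is not self.rear:
            out.append(node.page)
            node = node.next
        return out


cache = PageCache(frames=3)
hits = [cache.reference(p) for p in (1, 2, 3, 1, 4)]
print(hits)           # [False, False, False, True, False]
print(cache.pages())  # [4, 1, 3]
```

Because the hash stores the node itself, a hit is found and moved in constant time, with no walk along the list.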

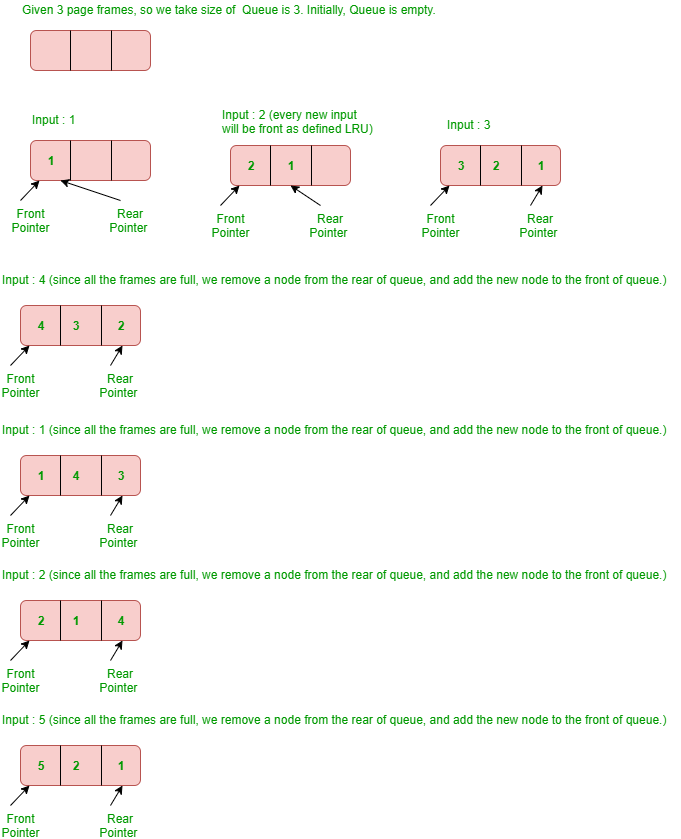

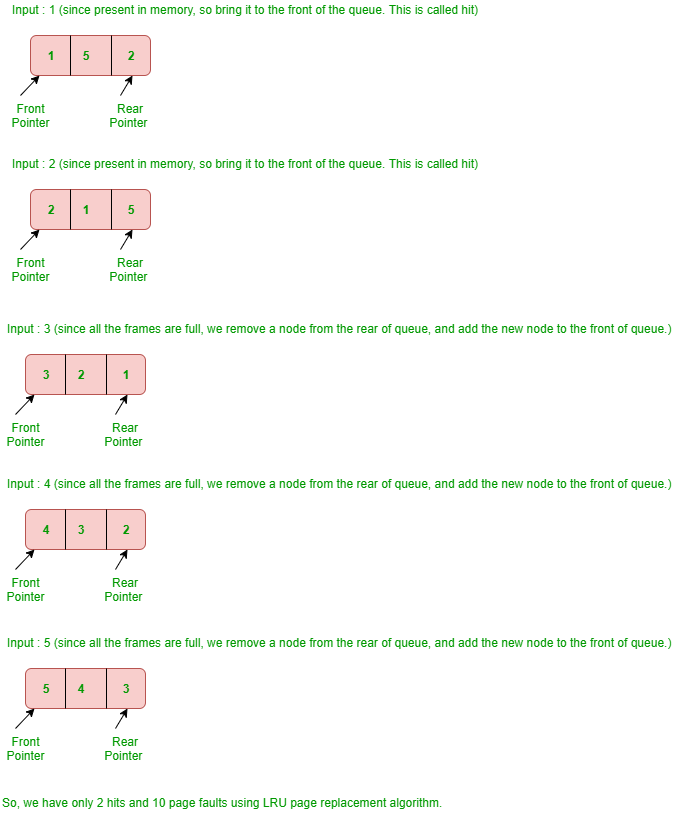

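Example: Consider the following reference string:

1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5

Find the number of page faults using the least recently used (LRU) page replacement algorithm with 3 page frames.

Explanation: The first seven references (1, 2, 3, 4, 1, 2, 5) all cause page faults. Pages 1, 2, and 3 fill the three frames, then 4 replaces 1, 1 replaces 2, 2 replaces 3, and 5 replaces 4. The next two references (1, 2) are page hits. The final three references (3, 4, 5) fault again, replacing 5, 1, and 2 in turn. In total there are 10 page faults and 2 page hits.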
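The page fault count for this reference string can be checked with a short simulation. The sketch below swaps the linked list for `collections.OrderedDict` from the standard library to keep it brief; the function name `count_page_faults` is an assumption made for illustration.

```python
from collections import OrderedDict


def count_page_faults(reference_string, frames):
    """Return the number of page faults under LRU replacement."""
    memory = OrderedDict()  # keys ordered from least to most recently used
    faults = 0
    for page in reference_string:
        if page in memory:
            # Page hit: mark the page as most recently used.
            memory.move_to_end(page)
        else:
            # Page fault: evict the least recently used page if memory is full.
            faults += 1
            if len(memory) >= frames:
                memory.popitem(last=False)
            memory[page] = True
    return faults


refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(count_page_faults(refs, frames=3))  # 10
```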

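LRU cache using Python

You can implement this with the help of a queue. Here, the queue is built on a doubly linked list. In this implementation the most recently used node sits at the tail of the list and the least recently used node sits right after the head. The code uses f-strings, so it needs Python 3.6 or later; it can be run in the PyCharm IDE or with any Python 3 interpreter.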

    import time


    class Node:
        """A node of the doubly linked list; holds one cached call."""

        def __init__(self, key, val):
            self.key = key
            self.val = val
            self.next = None
            self.prev = None


    class LRUCache:
        """Decorator that caches results and evicts the least recently used."""

        # Maximum number of cached results (a positive integer);
        # None means the cache is unlimited.
        cache_limit = None

        # When DEBUG is True, each call returns a string describing the
        # result and the cache contents instead of the bare result.
        DEBUG = False

        def __init__(self, func):
            self.func = func
            self.cache = {}
            # Sentinel nodes: head.next is the least recently used entry,
            # tail.prev is the most recently used entry.
            self.head = Node(0, 0)
            self.tail = Node(0, 0)
            self.head.next = self.tail
            self.tail.prev = self.head

        def __call__(self, *args, **kwargs):
            # Cache hit: move the node to the most recently used position.
            # Only the positional arguments form the cache key.
            if args in self.cache:
                self.llist(args)
                if self.DEBUG:
                    return f'Cached...{args}\n{self.cache[args]}\nCache: {self.cache}'
                return self.cache[args]

            # Cache miss with a full cache: evict the least recently used entry.
            if self.cache_limit is not None:
                if len(self.cache) >= self.cache_limit:
                    n = self.head.next
                    self._remove(n)
                    del self.cache[n.key]

            # Compute the result, store it, and append its node as the
            # most recently used entry.
            result = self.func(*args, **kwargs)
            self.cache[args] = result
            node = Node(args, result)
            self._add(node)

            if self.DEBUG:
                return f'{result}\nCache: {self.cache}'
            return result

        # Unlink a node from the doubly linked list.
        def _remove(self, node):
            p = node.prev
            n = node.next
            p.next = n
            n.prev = p

        # Append a node just before the tail sentinel (most recently used).
        def _add(self, node):
            p = self.tail.prev
            p.next = node
            self.tail.prev = node
            node.prev = p
            node.next = self.tail

        # Find the node for args and move it to the most recently used position.
        def llist(self, args):
            current = self.head.next
            while current is not self.tail:
                if current.key == args:
                    self._remove(current)
                    self._add(current)
                    if self.DEBUG:
                        # Re-insert the key so the printed dict follows recency order.
                        del self.cache[current.key]
                        self.cache[current.key] = current.val
                    break
                current = current.next


    # DEBUG is False by default; set it to True to print the cache contents.
    LRUCache.DEBUG = True

    # cache_limit is None by default; use 3 entries to match the example.
    LRUCache.cache_limit = 3


    @LRUCache
    def ex_func_01(n):
        print(f'Computing...{n}')
        time.sleep(1)
        return n


    print('\nFunction: ex_func_01')
    print(ex_func_01(1))
    print(ex_func_01(2))
    print(ex_func_01(3))
    print(ex_func_01(4))
    print(ex_func_01(1))
    print(ex_func_01(2))
    print(ex_func_01(5))
    print(ex_func_01(1))
    print(ex_func_01(2))
    print(ex_func_01(3))
    print(ex_func_01(4))
    print(ex_func_01(5))

Output:

    Function: ex_func_01
    Computing...1
    1
    Cache: {(1,): 1}
    Computing...2
    2
    Cache: {(1,): 1, (2,): 2}
    Computing...3
    3
    Cache: {(1,): 1, (2,): 2, (3,): 3}
    Computing...4
    4
    Cache: {(2,): 2, (3,): 3, (4,): 4}
    Computing...1
    1
    Cache: {(3,): 3, (4,): 4, (1,): 1}
    Computing...2
    2
    Cache: {(4,): 4, (1,): 1, (2,): 2}
    Computing...5
    5
    Cache: {(1,): 1, (2,): 2, (5,): 5}
    Cached...(1,)
    1
    Cache: {(2,): 2, (5,): 5, (1,): 1}
    Cached...(2,)
    2
    Cache: {(5,): 5, (1,): 1, (2,): 2}
    Computing...3
    3
    Cache: {(1,): 1, (2,): 2, (3,): 3}
    Computing...4
    4
    Cache: {(2,): 2, (3,): 3, (4,): 4}
    Computing...5
    5
    Cache: {(3,): 3, (4,): 4, (5,): 5}
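For comparison, Python's standard library already ships an LRU decorator, `functools.lru_cache`. The sketch below is not part of the implementation above; it runs the same reference string through a three-entry cache, and the function `square` is a hypothetical stand-in for an expensive computation.

```python
from functools import lru_cache


@lru_cache(maxsize=3)
def square(n):
    """Hypothetical stand-in for an expensive computation."""
    return n * n


for n in (1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5):
    square(n)

# cache_info() reports hits, misses, the size limit, and the current size.
info = square.cache_info()
print(info.hits, info.misses, info.currsize)  # 2 10 3
```

The ten misses correspond to the page faults in the example, and the two hits to the repeated references to 1 and 2.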