毫升 |使用 Sklearn 实现 KNN 分类器

先决条件:K-最近邻算法

K-Nearest Neighbors 是机器学习中最基本但必不可少的分类算法之一。它属于监督学习领域,在模式识别、数据挖掘和入侵检测中得到广泛应用。它在现实生活场景中被广泛使用,因为它是非参数的,也就是说,它不对数据的分布做出任何潜在的假设(与 GMM 等其他算法相反,它假设给定数据的高斯分布) .

本文将演示如何使用Python的 Sklearn 库实现K-最近邻分类器算法。

第 1 步:导入所需的库

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

import matplotlib.pyplot as plt

import seaborn as sns

第 2 步:读取数据集

cd C:\Users\Dev\Desktop\Kaggle\Breast_Cancer

# Changing the read file location to the location of the file

df = pd.read_csv('data.csv')

y = df['diagnosis']

X = df.drop('diagnosis', axis = 1)

X = X.drop('Unnamed: 32', axis = 1)

X = X.drop('id', axis = 1)

# Separating the dependent and independent variable

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size = 0.3, random_state = 0)

# Splitting the data into training and testing data

第 3 步:训练模型

K = []

training = []

test = []

scores = {}

for k in range(2, 21):

clf = KNeighborsClassifier(n_neighbors = k)

clf.fit(X_train, y_train)

training_score = clf.score(X_train, y_train)

test_score = clf.score(X_test, y_test)

K.append(k)

training.append(training_score)

test.append(test_score)

scores[k] = [training_score, test_score]

第 4 步:评估模型

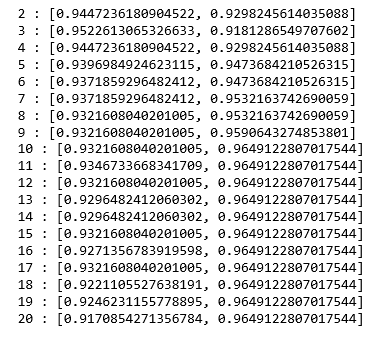

for keys, values in scores.items():

print(keys, ':', values)

我们现在尝试找到“k”的最佳值,即最近邻居的数量。

我们现在尝试找到“k”的最佳值,即最近邻居的数量。

第 5 步:绘制训练和测试分数图

ax = sns.stripplot(K, training);

ax.set(xlabel ='values of k', ylabel ='Training Score')

plt.show()

# function to show plot

ax = sns.stripplot(K, test);

ax.set(xlabel ='values of k', ylabel ='Test Score')

plt.show()

plt.scatter(K, training, color ='k')

plt.scatter(K, test, color ='g')

plt.show()

# For overlapping scatter plots

从上面的散点图中,我们可以得出 k 的最佳值将在 5 左右的结论。