Classifying Clothing Images in Python

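From choosing profile photos on Facebook and Instagram to categorizing clothing images in shopping apps such as Myntra, Amazon, and Flipkart, image classification is everywhere. It has become an integral part of e-commerce platforms. It is also used in law enforcement and on social networks to identify the faces of criminal suspects.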

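In this article, we will learn how to classify images in Python. Classifying clothing images is an example of image classification in machine learning: each image is assigned to the category it belongs to. For the clothing images, we will use the fashion_mnist dataset that ships with TensorFlow. The dataset contains clothing images from 10 different classes. It is a replacement for the beginner-level MNIST dataset of handwritten digits. We will learn more about it as we go.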

Step-by-step implementation

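Step 1: Import the libraries needed for classification

- TensorFlow: for building and training the model in Python

- NumPy: for array manipulation

- Matplotlib: for data visualization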

Python3

# importing the necessary libraries
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt

Step 2: Load and explore the data

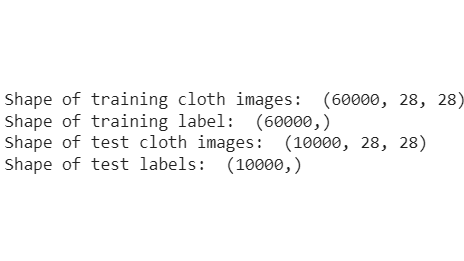

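Next we load the fashion_mnist dataset and print the shapes of the training and test data. There are 60,000 training images for fitting the model and 10,000 test images for evaluating it. In total, the dataset contains 70,000 images across ten classes: 'T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat', 'Sandal', 'Shirt', 'Sneaker', 'Bag', and 'Ankle boot'.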

Python3

# reference to the Fashion-MNIST dataset module
clothing_fashion_mnist = tf.keras.datasets.fashion_mnist

# loading the dataset from tensorflow
(x_train, y_train), (x_test, y_test) = clothing_fashion_mnist.load_data()

# displaying the shapes of training and testing dataset
print('Shape of training cloth images: ', x_train.shape)
print('Shape of training label: ', y_train.shape)
print('Shape of test cloth images: ', x_test.shape)
print('Shape of test labels: ', y_test.shape)

Output:

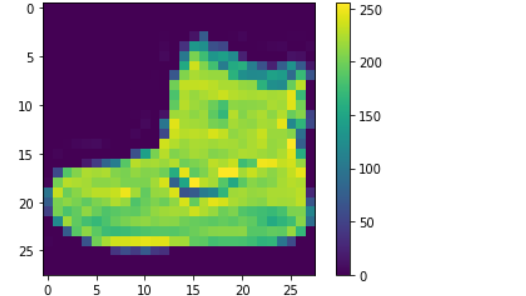

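The labels are an array of integers ranging from 0 to 9. Because the class names are not included in the fashion_mnist dataset, we store them in a variable so we can use them later for data visualization. The colorbar in the plot shows that the pixel values fall in the range 0 to 255.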
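
To make the label-to-name lookup concrete, here is a minimal sketch. The `sample_labels` array is a hypothetical stand-in for a slice of `y_train`, so the snippet runs without downloading the dataset; the class names are the ones listed in this article.

```python
import numpy as np

# Class names in label order (index 0..9), as listed in the article.
label_class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
                     'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

# Hypothetical labels standing in for a slice of y_train (an assumption).
sample_labels = np.array([9, 0, 0, 3, 0, 2, 7, 2], dtype=np.uint8)

# Each integer label is simply an index into the list of class names.
sample_names = [label_class_names[label] for label in sample_labels]
print(sample_names)

# Count how many samples fall into each of the 10 classes.
class_counts = np.bincount(sample_labels, minlength=len(label_class_names))
print(class_counts)
```

Running the same `np.bincount` call on the real `y_train` is a quick way to check how evenly the classes are represented.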

Python3

# storing the class names, as they are
# not provided in the dataset
label_class_names = ['T-shirt/top', 'Trouser',
                     'Pullover', 'Dress', 'Coat',
                     'Sandal', 'Shirt', 'Sneaker',
                     'Bag', 'Ankle boot']

# display the first training image
plt.imshow(x_train[0])
plt.colorbar()  # to display the colourbar
plt.show()

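Output:

Step 3: Preprocess the data

As we saw above, the pixel values range from 0 to 255. The code below normalizes the data by dividing every pixel by 255, which scales the values to between 0 and 1.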

Python3

x_train = x_train / 255.0  # normalizing the training data
x_test = x_test / 255.0  # normalizing the testing data

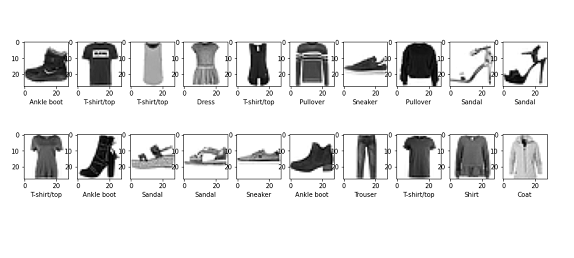
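
The effect of this scaling can be checked on a tiny array. This is a sketch only: the 2x2 `image` below is a made-up example, not data from the dataset.

```python
import numpy as np

# Hypothetical 2x2 "image" with uint8 pixel values in [0, 255].
image = np.array([[0, 51], [102, 255]], dtype=np.uint8)

# Dividing by a Python float promotes the uint8 array to float64.
scaled = image / 255.0
print(scaled.dtype)
print(scaled.min(), scaled.max())

# Optional variant: cast to float32 first to halve the memory footprint.
scaled32 = image.astype(np.float32) / 255.0
print(scaled32.dtype)
```

Note that the division changes the dtype from an integer type to a floating-point type, which is what the network's layers expect.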

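Step 4: Visualize the data

The code below displays the first 20 clothing images with their class labels, to confirm the data looks as expected before we build the model. We plot images from x_train with the binary colormap and label each one with its class name from the label_class_names list we stored earlier.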

Python3

plt.figure(figsize=(15, 5))  # figure size
i = 0
while i < 20:
    plt.subplot(2, 10, i+1)

    # showing each image with colourmap as binary
    plt.imshow(x_train[i], cmap=plt.cm.binary)

    # giving class labels
    plt.xlabel(label_class_names[y_train[i]])
    i = i+1

plt.show()  # plotting the final output figure

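Output:

Step 5: Build the model

Here we build the model by stacking neural network layers. tf.keras.layers.Flatten() converts each image from a 2D array (28 x 28 pixels) into a 1D array (784 values) and has no parameters to learn. The tf.keras.layers.Dense layers have weights and biases that are learned during training. The final Dense layer has 10 units, one per class, and outputs raw scores (logits).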

Python3

# Building the model (named cloth_model to match the later steps)
cloth_model = tf.keras.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10)
])
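
To see what these three layers compute, the following NumPy sketch reproduces the forward pass by hand. It is an illustration under stated assumptions, not how Keras implements the layers: the names `w1`, `b1`, `w2`, `b2` are hypothetical, the weights are random rather than Keras-initialized, and the input batch is fake data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Four fake 28x28 "images" with values in [0, 1) (an assumption for the demo).
batch = rng.random((4, 28, 28))

# Flatten: (4, 28, 28) -> (4, 784). No learnable parameters.
flat = batch.reshape(len(batch), -1)

# Dense(128, relu): a weight matrix plus a bias vector, then ReLU.
w1 = rng.normal(scale=0.01, size=(784, 128))
b1 = np.zeros(128)
hidden = np.maximum(flat @ w1 + b1, 0.0)

# Dense(10): one raw score (logit) per class, no activation.
w2 = rng.normal(scale=0.01, size=(128, 10))
b2 = np.zeros(10)
logits = hidden @ w2 + b2

# Total number of learnable parameters in the two Dense layers.
n_params = w1.size + b1.size + w2.size + b2.size
print(flat.shape, hidden.shape, logits.shape, n_params)
```

The parameter count from this sketch can be compared with the total that `cloth_model.summary()` reports.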

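Step 6: Compile the model

Here we compile the model with the adam optimizer, SparseCategoricalCrossentropy as the loss function, and metrics=['accuracy']. Setting from_logits=True tells the loss function that the model outputs raw logits rather than probabilities.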

Python3

# compiling the model
cloth_model.compile(optimizer='adam',
                    loss=tf.keras.losses.SparseCategoricalCrossentropy(
                        from_logits=True),
                    metrics=['accuracy'])
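
As a rough illustration of what this loss measures, here is a NumPy sketch of sparse categorical cross-entropy computed from logits and averaged over the batch. The function name and the example values are hypothetical, and this is a simplified re-implementation for intuition rather than TensorFlow's own code.

```python
import numpy as np

def sparse_categorical_crossentropy_from_logits(logits, labels):
    """Mean negative log-probability of the true class, from raw logits."""
    # Subtract the row-wise max for numerical stability (log-sum-exp trick).
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Pick the log-probability of each sample's integer ("sparse") label.
    return float(-log_probs[np.arange(len(labels)), labels].mean())

# Hypothetical logits for 2 samples and 3 classes, with integer labels.
logits = np.array([[2.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0]])
labels = np.array([0, 2])
loss = sparse_categorical_crossentropy_from_logits(logits, labels)
print(loss)

# With all-zero logits over 10 classes, every class has probability 1/10,
# so the loss equals ln(10): roughly what an untrained model starts near.
uniform_loss = sparse_categorical_crossentropy_from_logits(
    np.zeros((5, 10)), np.array([0, 1, 2, 3, 4]))
print(uniform_loss)
```

"Sparse" here refers to the labels being plain integers (0 to 9) rather than one-hot vectors, which matches the format of y_train.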

Step 7: Train the model on the training data

Now we feed the training data, x_train and y_train, to the compiled model. The fit() method trains the model on this data, here for 10 epochs.

Python3

# Fitting the model to the training data

cloth_model.fit(x_train, y_train, epochs=10)

Output:

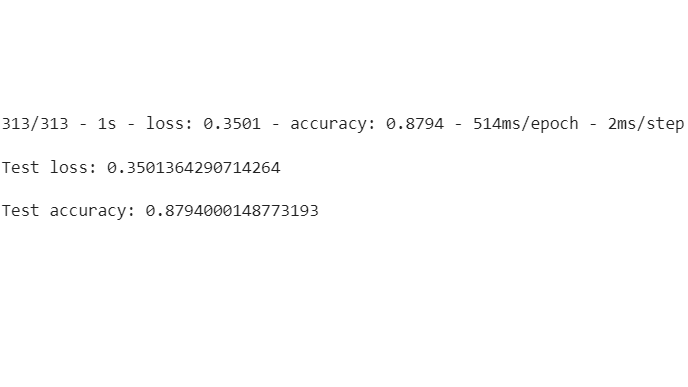
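
The number of weight updates behind those 10 epochs can be worked out with a little arithmetic. This sketch assumes the Keras default batch size of 32, since the fit() call above does not pass batch_size; the variable names are illustrative.

```python
import math

num_train = 60000   # training images in fashion_mnist
batch_size = 32     # assumed: the Keras default when batch_size is not given
epochs = 10         # as passed to fit() above

# Each epoch walks through the training set once, one batch at a time.
steps_per_epoch = math.ceil(num_train / batch_size)
total_updates = steps_per_epoch * epochs
print(steps_per_epoch, total_updates)
```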

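Step 8: Evaluate the model's loss and accuracy

Here we find out how good the model is by computing its loss and accuracy on the test data. In the output, the accuracy on the test data is lower than the accuracy on the training data, which suggests the model overfits the training data to some degree.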

Python3

# calculating loss and accuracy score
test_loss, test_acc = cloth_model.evaluate(x_test,
                                           y_test,
                                           verbose=2)
print('\nTest loss:', test_loss)
print('\nTest accuracy:', test_acc)

Output:

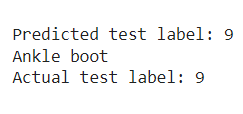
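
The accuracy metric itself is simple to reproduce. The sketch below uses made-up logits and labels (not the model's real outputs) to show the calculation: take the highest-scoring class for each sample and compare it with the true label.

```python
import numpy as np

def accuracy(logits, labels):
    """Fraction of samples whose highest-scoring class equals the label."""
    predicted = np.argmax(logits, axis=1)
    return float(np.mean(predicted == labels))

# Hypothetical logits for 4 samples and 3 classes.
demo_logits = np.array([[2.0, 1.0, 0.0],
                        [0.0, 3.0, 1.0],
                        [1.0, 0.0, 4.0],
                        [5.0, 0.0, 1.0]])
demo_labels = np.array([0, 1, 2, 1])  # the last sample is misclassified

demo_acc = accuracy(demo_logits, demo_labels)
print(demo_acc)
```

Computing this on both the training and the test set and comparing the two numbers is one simple way to gauge how much a model overfits.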

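Step 9: Make predictions with the trained model on the test data

Now we can use the test dataset to make predictions with the trained model. We predict the first test image, x_test[0], by reading prediction[0]; the result is test label 9, i.e. Ankle boot. We append a Softmax() layer to convert the model's linear outputs (logits) into probabilities, which are easier to interpret.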

Python3

# using Softmax() function to convert
# linear output logits to probability
prediction_model = tf.keras.Sequential(
    [cloth_model, tf.keras.layers.Softmax()])

# feeding the testing data to the probability
# prediction model
prediction = prediction_model.predict(x_test)

# predicted class label
print('Predicted test label:', np.argmax(prediction[0]))

# predicted class label name
print(label_class_names[np.argmax(prediction[0])])

# actual class label
print('Actual test label:', y_test[0])

Output:

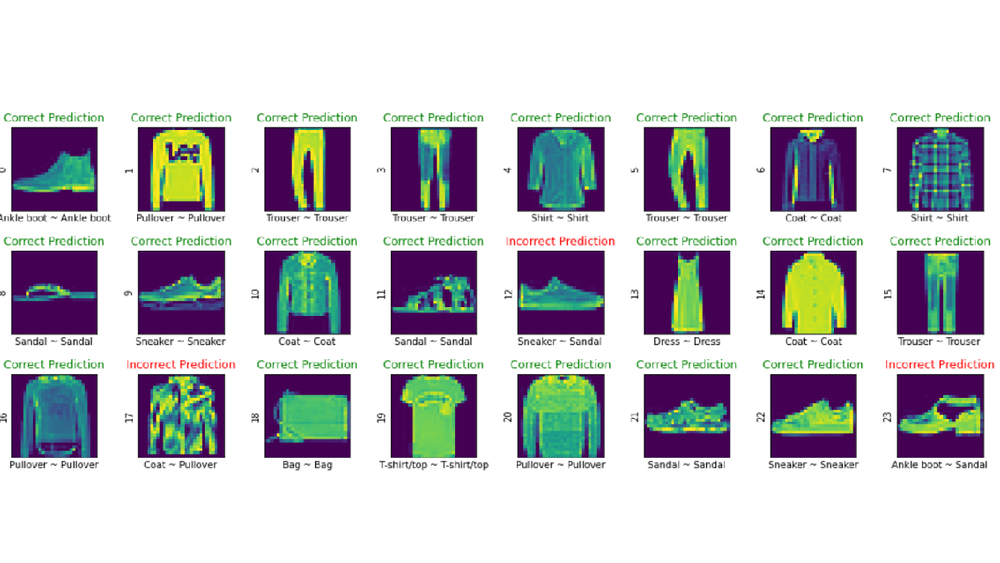
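
For intuition about what the Softmax() layer does, here is a small NumPy sketch with hypothetical logits. It shows that softmax turns each row of scores into probabilities that sum to 1 without changing which class scores highest.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores to probabilities along the last axis."""
    # Subtracting the max keeps np.exp from overflowing on large logits.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

# Hypothetical logits for 2 samples and 3 classes.
demo_logits = np.array([[1.0, 3.0, 0.5],
                        [2.0, -1.0, 2.5]])
probs = softmax(demo_logits)

print(probs.sum(axis=1))            # each row sums to 1
print(np.argmax(demo_logits, axis=1))
print(np.argmax(probs, axis=1))     # same winners as the logits
```

Because the ordering is preserved, the predicted label is the same with or without softmax; the probabilities mainly help when you want to judge how confident the model is.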

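Step 10: Visualize the predictions against the actual test labels

Finally, we visualize the first 24 predicted images alongside their actual class labels to see how well the model performs.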

Python3

# assigning the figure size
plt.figure(figsize=(15, 6))
i = 0

# plotting total 24 images by iterating through it
while i < 24:
    image, actual_label = x_test[i], y_test[i]
    predicted_label = np.argmax(prediction[i])
    plt.subplot(3, 8, i+1)
    plt.tight_layout()
    plt.xticks([])
    plt.yticks([])

    # display plot
    plt.imshow(image)

    # conditional expression to distinguish right and
    # wrong predictions (parenthesized so it can span lines)
    color, label = (('green', 'Correct Prediction')
                    if predicted_label == actual_label
                    else ('red', 'Incorrect Prediction'))

    # plotting labels and giving color to it
    # according to its correctness
    plt.title(label, color=color)

    # labelling the images in x-axis to see
    # the correct and incorrect results
    plt.xlabel(" {} ~ {} ".format(
        label_class_names[actual_label],
        label_class_names[predicted_label]))

    # labelling the images orderwise in y-axis
    plt.ylabel(i)

    # incrementing counter variable
    i += 1

plt.show()  # render the finished figure

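Output:

In the output, we can clearly see that the 12th, 17th, and 23rd predictions are misclassified, while the rest are correct. In practice no classification model is 100% accurate, so this is a reasonably good result for such a simple model.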
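
Instead of reading the misclassified positions off the figure, they can also be located programmatically. The sketch below uses short made-up `predicted` and `actual` arrays as stand-ins; with the real data you would compare `np.argmax(prediction, axis=1)` against `y_test`.

```python
import numpy as np

# Hypothetical predicted and actual labels for 8 test images.
predicted = np.array([9, 2, 1, 1, 0, 1, 4, 6])
actual = np.array([9, 2, 1, 1, 6, 1, 4, 4])

# Indices (0-based) where the prediction differs from the true label.
wrong = np.flatnonzero(predicted != actual)
print(wrong)

# Share of correct predictions in this small sample.
sample_accuracy = float(np.mean(predicted == actual))
print(sample_accuracy)
```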