Implementation of Perceptron Algorithm for XNOR Logic Gate with 2-bit Binary Input

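In the field of Machine Learning, the Perceptron is a supervised learning algorithm for binary classifiers. The Perceptron Model implements the following function: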

$$
\begin{array}{c}
\hat{y}=\Theta\left(w_{1} x_{1}+w_{2} x_{2}+\ldots+w_{n} x_{n}+b\right) \\
=\Theta(\mathbf{w} \cdot \mathbf{x}+b) \\
\text { where } \Theta(v)=\left\{\begin{array}{cc} 1 & \text { if } v \geqslant 0 \\ 0 & \text { otherwise } \end{array}\right.
\end{array}
$$

For a particular choice of the weight vector $\mathbf{w}$ and bias parameter $b$, the model predicts the output $\hat{y}$ for the corresponding input vector $\mathbf{x}$.
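To make the formula concrete, here is a minimal NumPy sketch that evaluates $\hat{y} = \Theta(\mathbf{w} \cdot \mathbf{x} + b)$ for all four 2-bit inputs at once. The weights and bias are illustrative values chosen for this example (not taken from the article), picked so that two inputs land exactly on $v = 0$ and show the $v \geqslant 0 \Rightarrow 1$ convention.

```python
import numpy as np

def theta(v):
    """Unit step, elementwise: 1 where v >= 0, otherwise 0."""
    return np.where(v >= 0, 1, 0)

def perceptron(X, w, b):
    """Evaluate y_hat = Theta(w . x + b) for every row x of X."""
    return theta(X @ w + b)

# Illustrative (hypothetical) parameters, not from the article.
w = np.array([1.0, 1.0])
b = -1.0

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
v = X @ w + b                              # pre-activations
print(v.tolist())                          # [-1.0, 0.0, 0.0, 1.0]
print(perceptron(X, w, b).tolist())        # [0, 1, 1, 1]
```

Note that the inputs $(0, 1)$ and $(1, 0)$ give $v = 0$ exactly and are mapped to 1, as the definition of $\Theta$ requires.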

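The truth table of the XNOR logic function for 2-bit binary variables, i.e., the input vector $\mathbf{x} : (x_{1}, x_{2})$ and the corresponding output $y$:

| $x_{1}$ | $x_{2}$ | $y$ |
|---|---|---|
| 0 | 0 | 1 |
| 0 | 1 | 0 |
| 1 | 0 | 0 |
| 1 | 1 | 1 |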
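The table can be regenerated from the one-line rule it encodes: XNOR outputs 1 exactly when the two bits are equal. A small sketch (the helper name `xnor` is illustrative):

```python
from itertools import product

def xnor(x1, x2):
    """Hypothetical helper: XNOR is 1 exactly when the two bits are equal."""
    return int(x1 == x2)

# Enumerate all 2-bit inputs in the same order as the table above.
truth_table = [(x1, x2, xnor(x1, x2)) for x1, x2 in product((0, 1), repeat=2)]
for row in truth_table:
    print(row)
```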

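We can observe that $\text{XNOR}(x_{1}, x_{2}) = \text{OR}\left(\text{NOT}\left(\text{OR}(x_{1}, x_{2})\right), \text{AND}(x_{1}, x_{2})\right)$.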
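Before building any perceptrons, the decomposition can be checked exhaustively with plain Boolean operations on bits. The gate helpers below are illustrative names for this sketch only.

```python
from itertools import product

# Hypothetical bit-level gate helpers (inputs and outputs are 0 or 1).
def OR(a, b):
    return a | b

def AND(a, b):
    return a & b

def NOT(a):
    return 1 - a

# Check XNOR(x1, x2) == OR(NOT(OR(x1, x2)), AND(x1, x2)) on every 2-bit input.
for x1, x2 in product((0, 1), repeat=2):
    decomposed = OR(NOT(OR(x1, x2)), AND(x1, x2))
    assert decomposed == int(x1 == x2), (x1, x2)
print("decomposition matches XNOR on all 4 inputs")
```

The NOT(OR) term is 1 only for $(0, 0)$ and the AND term is 1 only for $(1, 1)$, so their OR covers exactly the two rows where the table outputs 1.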

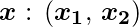

Designing the Perceptron Network:

- Step 1: For the weight vector $\mathbf{w} : (w_{1}, w_{2})$ applied to the input vector $\mathbf{x} : (x_{1}, x_{2})$ at the OR and AND nodes, the associated perceptron functions can be defined as:

  $$\boldsymbol{\hat{y}_{1}} = \Theta\left(w_{1} x_{1}+w_{2} x_{2}+b_{OR}\right)$$

  $$\boldsymbol{\hat{y}_{2}} = \Theta\left(w_{1} x_{1}+w_{2} x_{2}+b_{AND}\right)$$

- Step 2: The output $\boldsymbol{\hat{y}_{1}}$ from the OR node is fed into a NOT node with weight $w_{NOT}$, and the associated perceptron function can be defined as:

  $$\boldsymbol{\hat{y}_{3}} = \Theta\left(w_{NOT} \boldsymbol{\hat{y}_{1}}+b_{NOT}\right)$$

- Step 3: The output $\boldsymbol{\hat{y}_{2}}$ from the AND node and the output $\boldsymbol{\hat{y}_{3}}$ from the NOT node (Step 2) are fed into an OR node with weights $w_{OR1}$ and $w_{OR2}$. The corresponding output $\boldsymbol{\hat{y}}$ is the final output of the XNOR logic function. The associated perceptron function can be defined as:

  $$\boldsymbol{\hat{y}} = \Theta\left(w_{OR1} \boldsymbol{\hat{y}_{3}}+w_{OR2} \boldsymbol{\hat{y}_{2}}+b_{OR}\right)$$
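The three steps can be sketched as a single forward pass whose parameters are plain arguments named after the symbols in the equations. The names `theta` and `xnor_network` are hypothetical, chosen for this sketch. The values plugged in at the end are the ones the article uses for its implementation ($w_1 = w_2 = w_{OR1} = w_{OR2} = 1$, $w_{NOT} = -1$, $b_{AND} = -1.5$, $b_{OR} = -0.5$, $b_{NOT} = 0.5$).

```python
def theta(v):
    """Unit step function: 1 if v >= 0, otherwise 0."""
    return 1 if v >= 0 else 0

def xnor_network(x1, x2, w1, w2, b_or, b_and, w_not, b_not, w_or1, w_or2):
    """Hypothetical helper: forward pass through Steps 1-3, returns (y1, y2, y3, y)."""
    # Step 1: OR and AND nodes share the input weights (w1, w2).
    y1 = theta(w1 * x1 + w2 * x2 + b_or)
    y2 = theta(w1 * x1 + w2 * x2 + b_and)
    # Step 2: the NOT node negates the OR output.
    y3 = theta(w_not * y1 + b_not)
    # Step 3: a final OR node combines the NOT and AND outputs (reusing b_OR).
    y = theta(w_or1 * y3 + w_or2 * y2 + b_or)
    return y1, y2, y3, y

# Parameter values used in the article's implementation.
params = dict(w1=1, w2=1, b_or=-0.5, b_and=-1.5,
              w_not=-1, b_not=0.5, w_or1=1, w_or2=1)

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), xnor_network(x1, x2, **params))
```

Returning the intermediate outputs makes it easy to see each node's contribution: for $(0, 0)$ only the NOT branch fires, and for $(1, 1)$ only the AND branch does.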

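For the implementation, the weight parameters are taken as $w_{1} = 1, w_{2} = 1, w_{NOT} = -1, w_{OR1} = 1, w_{OR2} = 1$ and the bias parameters as $b_{AND} = -1.5, b_{OR} = -0.5, b_{NOT} = 0.5$.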
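One way to see why these particular values work (an observation made for this example, not stated in the article): with unit weights, the weighted sum of two bits can only be 0, 1 or 2, and each bias puts the threshold halfway between two attainable sums. The sketch lists every pre-activation $v = \mathbf{w} \cdot \mathbf{x} + b$ for the OR, AND and NOT nodes and checks how far each lies from the boundary $v = 0$.

```python
from itertools import product

inputs = list(product((0, 1), repeat=2))

# Pre-activation v = w . x + b for each node, using the article's parameters.
v_or = [1 * x1 + 1 * x2 - 0.5 for x1, x2 in inputs]   # w1 = w2 = 1, b_OR = -0.5
v_and = [1 * x1 + 1 * x2 - 1.5 for x1, x2 in inputs]  # w1 = w2 = 1, b_AND = -1.5
v_not = [-1 * y + 0.5 for y in (0, 1)]                # w_NOT = -1, b_NOT = 0.5

for name, v in [("OR", v_or), ("AND", v_and), ("NOT", v_not)]:
    # Smallest distance of any pre-activation from the step threshold v = 0.
    margin = min(abs(val) for val in v)
    print(name, v, "margin =", margin)
```

Every node ends up with a margin of 0.5, so no input sits exactly on the threshold. The final OR node in Step 3 also receives bits, so the same OR pre-activations apply there.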

Python Implementation:

```python
# importing Python library
import numpy as np


# define Unit Step Function
def unitStep(v):
    if v >= 0:
        return 1
    else:
        return 0


# design Perceptron Model
def perceptronModel(x, w, b):
    v = np.dot(w, x) + b
    y = unitStep(v)
    return y


# NOT Logic Function
# wNOT = -1, bNOT = 0.5
def NOT_logicFunction(x):
    wNOT = -1
    bNOT = 0.5
    return perceptronModel(x, wNOT, bNOT)


# AND Logic Function
# w1 = 1, w2 = 1, bAND = -1.5
def AND_logicFunction(x):
    w = np.array([1, 1])
    bAND = -1.5
    return perceptronModel(x, w, bAND)


# OR Logic Function
# here w1 = wOR1 = 1,
# w2 = wOR2 = 1, bOR = -0.5
def OR_logicFunction(x):
    w = np.array([1, 1])
    bOR = -0.5
    return perceptronModel(x, w, bOR)


# XNOR Logic Function
# with AND, OR and NOT
# function calls in sequence
def XNOR_logicFunction(x):
    # Step 1: OR and AND nodes on the raw input
    y1 = OR_logicFunction(x)
    y2 = AND_logicFunction(x)
    # Step 2: NOT node on the OR output
    y3 = NOT_logicFunction(y1)
    # Step 3: final OR node; since wOR1 = wOR2 = 1, the order of y2 and y3 does not matter
    final_x = np.array([y2, y3])
    finalOutput = OR_logicFunction(final_x)
    return finalOutput


# testing the Perceptron Model
test1 = np.array([0, 1])
test2 = np.array([1, 1])
test3 = np.array([0, 0])
test4 = np.array([1, 0])

print("XNOR({}, {}) = {}".format(0, 1, XNOR_logicFunction(test1)))
print("XNOR({}, {}) = {}".format(1, 1, XNOR_logicFunction(test2)))
print("XNOR({}, {}) = {}".format(0, 0, XNOR_logicFunction(test3)))
print("XNOR({}, {}) = {}".format(1, 0, XNOR_logicFunction(test4)))
```

Output:

```
XNOR(0, 1) = 0
XNOR(1, 1) = 1
XNOR(0, 0) = 1
XNOR(1, 0) = 0
```

Here, the model's predicted output ($\hat{y}$) for each test input matches the conventional output ($y$) of the XNOR logic gate given in the truth table.

Hence, this verifies that the perceptron algorithm for the XNOR logic gate is implemented correctly.
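The four hand-written tests can also be run as a single batch. The sketch below is a vectorized NumPy rewrite of the same network (a restructuring chosen for this example, not the article's code): the OR and AND nodes become the rows of one weight matrix `W1`, and the result is compared against the truth table, where XNOR is 1 exactly when $x_1 = x_2$.

```python
import numpy as np

def step(v):
    """Elementwise unit step: 1 where v >= 0, else 0."""
    return (v >= 0).astype(int)

# All four 2-bit inputs, one per row.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

# Step 1: OR and AND nodes as rows of W1, both with weights (1, 1).
W1 = np.array([[1, 1], [1, 1]])
b1 = np.array([-0.5, -1.5])        # b_OR, b_AND
H = step(X @ W1.T + b1)            # columns: y1 (OR), y2 (AND)
y1, y2 = H[:, 0], H[:, 1]

# Step 2: NOT node on y1 (w_NOT = -1, b_NOT = 0.5).
y3 = step(-1 * y1 + 0.5)

# Step 3: final OR node on (y3, y2) with weights (1, 1) and b_OR = -0.5.
y_hat = step(np.stack([y3, y2], axis=1) @ np.array([1, 1]) - 0.5)

expected = (X[:, 0] == X[:, 1]).astype(int)   # XNOR truth table
print(y_hat.tolist())                          # [1, 0, 0, 1]
assert np.array_equal(y_hat, expected)
```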