📌 相关文章

- 在Python使用NLTK删除停用词(1)

- 使用 nltk 删除停用词 - Python (1)

- 在Python使用NLTK删除停用词

- 使用 nltk 删除停用词 - Python 代码示例

- nltk 停用词 - Python (1)

- nltk 停用词 - Python 代码示例

- 下载停用词 nltk - Python (1)

- 下载停用词 nltk - Python 代码示例

- 下载停用词 nltk (1)

- 下载停用词 nltk - 任何代码示例

- Python删除停用词(1)

- 删除python中的停用词(1)

- 删除停用词 - Python (1)

- Python删除停用词

- 删除python代码示例中的停用词

- 删除停用词 - Python 代码示例

- 在Python中使用NLTK对停用词进行语音标记

- 在Python中使用NLTK对停用词进行语音标记(1)

- 删除停用词 python - 任何代码示例

- 在Python中使用 NLTK 使用停用词进行部分语音标记(1)

- 在Python中使用 NLTK 使用停用词进行部分语音标记

- 从文本中删除停用词 - Python 代码示例

- 从字符串列表中删除停用词 python (1)

- 从字符串列表中删除停用词 python 代码示例

- python中的nltk(1)

- nltk python (1)

- 从 nltk.corpus 导入停用词错误 (1)

- nltk python 代码示例

- python代码示例中的nltk

📜 在Python中使用NLTK删除停用词

📅 最后修改于: 2020-04-26 10:24:53 🧑 作者: Mango

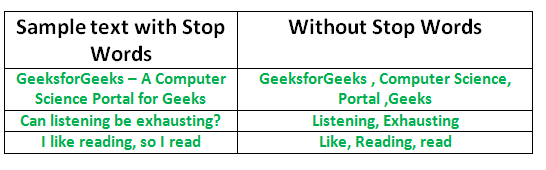

将数据转换为计算机可以理解的内容的过程称为预处理。预处理的主要形式之一是过滤掉无用的数据。在自然语言处理中,无用的单词(数据)称为停用词。

什么是停用词?

停用词:停用词是在为索引条目进行搜索或检索时被搜索引擎忽略的常用词(例如“ the”,“ a”,“ an”,“ in”)搜索查询的结果。

我们不希望这些单词占用我们数据库中的空间或占用宝贵的处理时间。为此,我们可以通过存储您认为是停用词的单词列表来轻松删除它们。Python中的NLTK(自然语言工具包)具有以16种不同语言存储的停用词列表。您可以在nltk_data目录中找到它们。home / pratima / nltk_data / corpora / stopwords是目录地址。(不要忘记更改您的主目录名称),

要检查停用词列表,您可以在Python shell中键入以下命令。

import nltk

from nltk.corpus import stopwords

set(stopwords.words('english'))输出:

{‘ourselves’, ‘hers’, ‘between’, ‘yourself’, ‘but’, ‘again’, ‘there’, ‘about’, ‘once’, ‘during’, ‘out’, ‘very’, ‘having’, ‘with’, ‘they’, ‘own’, ‘an’, ‘be’, ‘some’, ‘for’, ‘do’, ‘its’, ‘yours’, ‘such’, ‘into’, ‘of’, ‘most’, ‘itself’, ‘other’, ‘off’, ‘is’, ‘s’, ‘am’, ‘or’, ‘who’, ‘as’, ‘from’, ‘him’, ‘each’, ‘the’, ‘themselves’, ‘until’, ‘below’, ‘are’, ‘we’, ‘these’, ‘your’, ‘his’, ‘through’, ‘don’, ‘nor’, ‘me’, ‘were’, ‘her’, ‘more’, ‘himself’, ‘this’, ‘down’, ‘should’, ‘our’, ‘their’, ‘while’, ‘above’, ‘both’, ‘up’, ‘to’, ‘ours’, ‘had’, ‘she’, ‘all’, ‘no’, ‘when’, ‘at’, ‘any’, ‘before’, ‘them’, ‘same’, ‘and’, ‘been’, ‘have’, ‘in’, ‘will’, ‘on’, ‘does’, ‘yourselves’, ‘then’, ‘that’, ‘because’, ‘what’, ‘over’, ‘why’, ‘so’, ‘can’, ‘did’, ‘not’, ‘now’, ‘under’, ‘he’, ‘you’, ‘herself’, ‘has’, ‘just’, ‘where’, ‘too’, ‘only’, ‘myself’, ‘which’, ‘those’, ‘i’, ‘after’, ‘few’, ‘whom’, ‘t’, ‘being’, ‘if’, ‘theirs’, ‘my’, ‘against’, ‘a’, ‘by’, ‘doing’, ‘it’, ‘how’, ‘further’, ‘was’, ‘here’, ‘than’}注意:您甚至可以通过在english.txt中添加您选择的单词来修改停用词目录中的文件。

使用NLTK删除停用词

下面的程序从一段文本中删除停用词:

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

example_sent = "This is a sample sentence, showing off the stop words filtration."

stop_words = set(stopwords.words('english'))

word_tokens = word_tokenize(example_sent)

filtered_sentence = [w for w in word_tokens if not w in stop_words]

filtered_sentence = []

for w in word_tokens:

if w not in stop_words:

filtered_sentence.append(w)

print(word_tokens)

print(filtered_sentence)输出:

['This', 'is', 'a', 'sample', 'sentence', ',', 'showing',

'off', 'the', 'stop', 'words', 'filtration', '.']

['This', 'sample', 'sentence', ',', 'showing', 'stop',

'words', 'filtration', '.']在文件中执行停用词操作

在下面的代码中,text.txt是原始输入文件,其中将删除停用词。filteredtext.txt是输出文件。可以使用以下代码完成:

import io

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

#word_tokenize接受字符串作为输入,而不是文件.

stop_words = set(stopwords.words('english'))

file1 = open("text.txt")

line = file1.read()# Use this to read file content as a stream:

words = line.split()

for r in words:

if not r in stop_words:

appendFile = open('filteredtext.txt','a')

appendFile.write(" "+r)

appendFile.close()这就是我们通过删除不会对将来的操作产生影响的词来提高处理后的内容的效率。